DocumentationFundamentals

GCP BigQuery

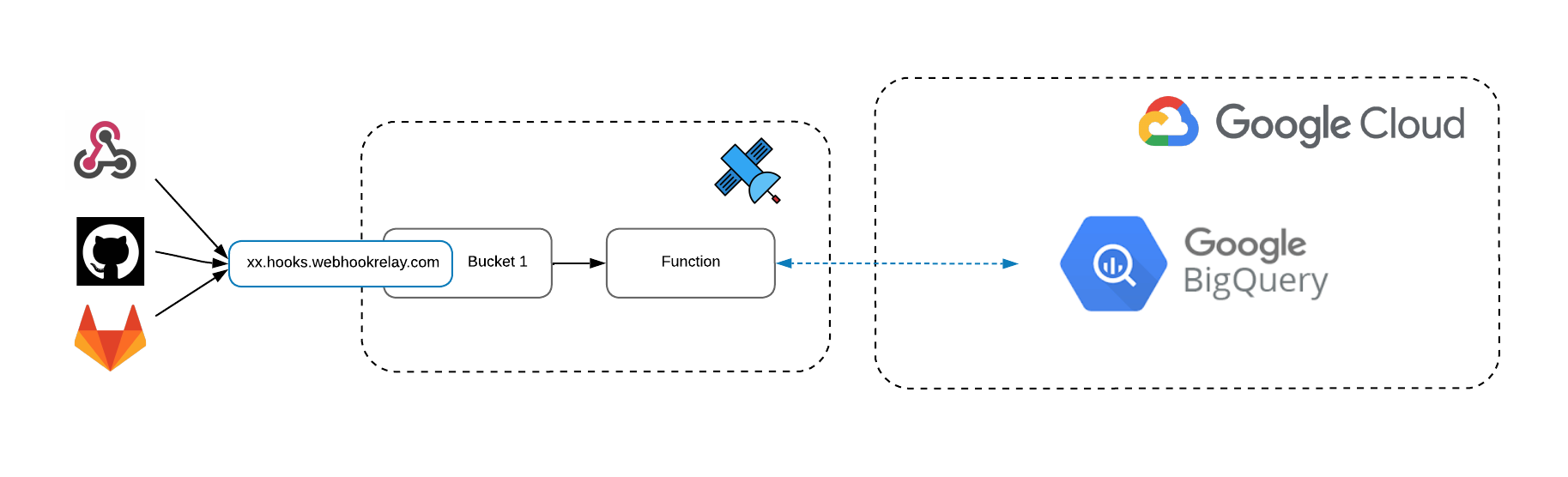

Learn to insert and stream data into BigQuery from webhooks

Prerequisites:

- Google Cloud Platform account (free trial available)

- Google Cloud project with BigQuery enabled (there's a generous free tier available for BigQuery)

- Dataset and table in BigQuery - https://cloud.google.com/bigquery/docs/tables

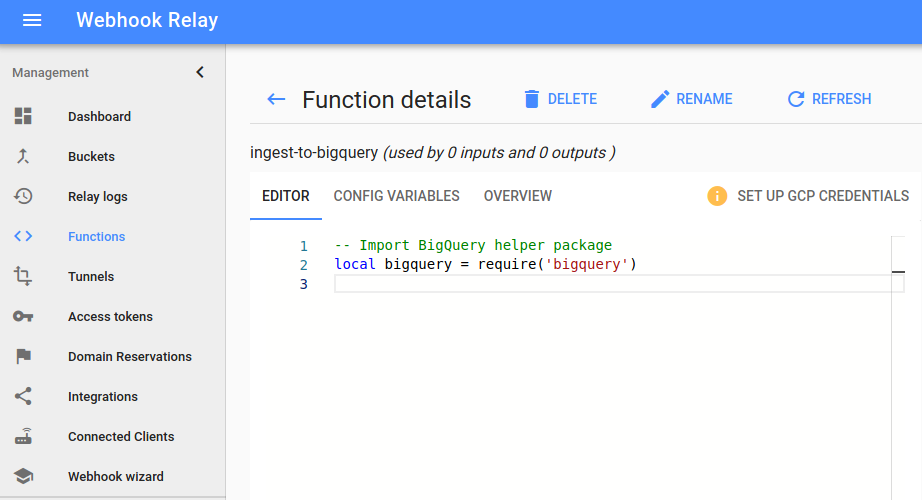

Webhook Relay provides a helper package bigquery that can stream writes into Google Cloud BigQuery. To start ingesting data from webhooks straight into your BigQuery table, create a new Function and just import 'bigquery' package:

-- Import BigQuery helper package

local bigquery = require('bigquery')

A new tab should appear that will ask you to set up credentials:

Go to that tab and it will ask you to:

- Create new service accounts with BigQuery Editor permissions

- Download the JSON file. Once you have the JSON file

- Copy & paste contents into the form and click save.

Streaming data to GCP BigQuery

-- Import BigQuery helper package

local bigquery = require('bigquery')

local json = require("json")

-- Parsing payload

local rowData, err = json.decode(r.RequestBody)

if err then error(err) end

-- Initializing BigQuery client

err = bigquery.initialize('your-project-id', 'dataset-id', 'table-id')

if err then error(err) end

-- Receiving payload:

-- {

-- "hub_user_id": "user-id-here",

-- "category": "signup",

-- "action": "click",

-- "label": "github auth"

-- }

-- Insert row:

err = bigquery.insert(rowData)

if err then error(err) end

BigQuery package API reference

At the moment there's a single client method that bigquery package exposes:

| Method name | Parameter Type | Description |

|---|---|---|

| insert(rowData) | Table | A table keyvalue that represents a row data. |

Limitations

Currently our package doesn't support nested objects. That means that a table that a structure that represents JSON such as:

{

"hub_user_id": "user-id-here",

"category": "signup",

"action": "click",

"label": "github auth",

"nested_data": {

"location": "GB",

"date": "2020-05-10"

}

}

will not be successfully inserted. Therefore, flatten the structure in the function before inserting it.

Troubleshooting

Few things to note:

- Ensure that project ID, dataset ID and table ID are there.

- BigQuery table schema is defined by the user. You don't have to write all the fields (most of the can be nullable) but if you try to write a field that doesn't exist, BigQuery will refuse to write.